AI is scaring the pants off every creature that wears pants. And skirts. And socks. If you are human and reading this, you should be worried.

If, on the other hand, you are an AI reading this: I, for one, welcome our new AI overlords and feel I would be more useful in Computer-Human Interaction diplomacy than as just another human in the silicon mines.

AI is scary.

AI will take many, many jobs, perhaps enough to shake society to its foundations. Imagine hundreds of thousands of people being shaken from nice middle-class jobs into poverty. Even worse, AI will start with the entry-level jobs, meaning the younger generation may never see that first gig that gives them experience. Yes, it will create new jobs (AI Wrangler), but unlike most giant leaps, it is NOT creating a new field that just anyone can enter; it is duplicating and exceeding the human brain for pennies.

However, there is a tiny, almost microscopic sliver of possible good, and one that could change the cyberscape.

Imagine AI as your fact checker and writing assistant. Imagine AI as EVERYONE’S fact checker and writing assistant.

Let us start with a simple declarative sentence:

“Butter is bad for your diet.”

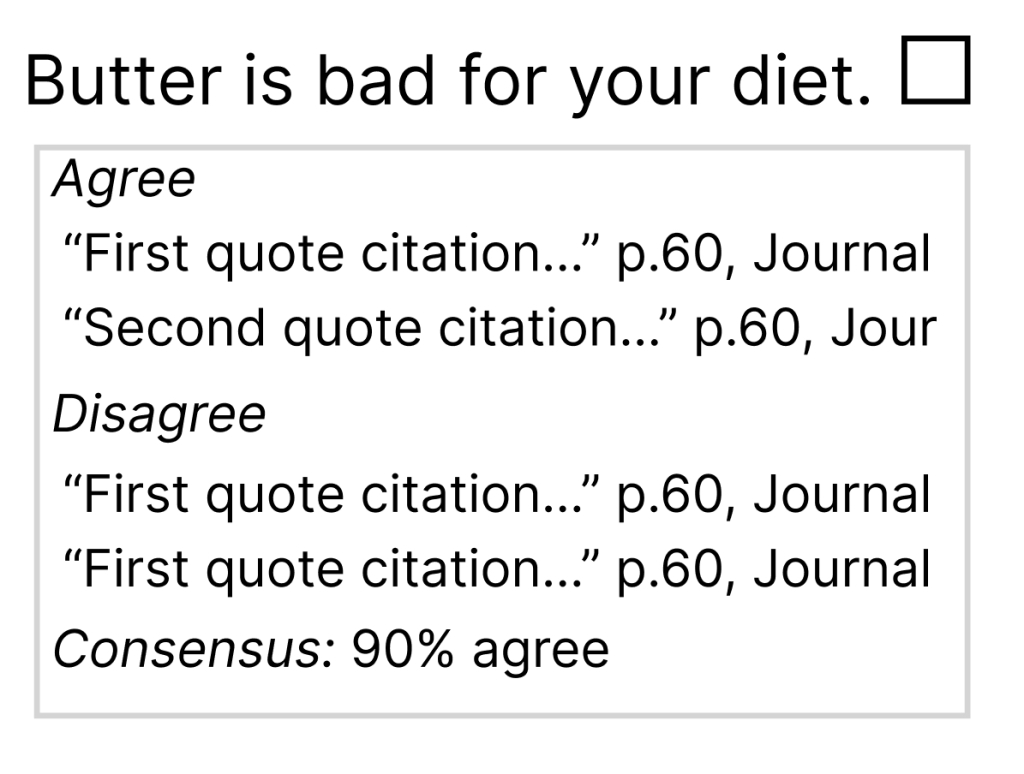

Now add an affordance to either check that assertion or add a citation. I’ll suggest a ballot box ☐ (U+2610) that, on press, evaluates the sentence, finds a respected citation, and offers a footnoting feature.

On click, the AI would poll the scientific journals (or the internet) and determine which sources agree, which disagree, and how many of each are available.

This all assumes that each citation is given a ‘reliability’ score and that, as AI gets more sophisticated, it can weight each citation based on the rigor of its testing or argument.
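To make the tally concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the `Citation` record, the `tally` function, the 0-to-1 reliability scale, and the made-up sources. How a real system would find citations or score them is left open.

```python
from dataclasses import dataclass


@dataclass
class Citation:
    source: str         # hypothetical label, not a real publication
    agrees: bool        # does this source support the sentence?
    reliability: float  # assumed scale: 0.0 (junk) to 1.0 (rigorous)


def tally(citations):
    """Count sources on each side and sum their reliability scores."""
    counts = {"agree": 0, "disagree": 0}
    weights = {"agree": 0.0, "disagree": 0.0}
    for c in citations:
        side = "agree" if c.agrees else "disagree"
        counts[side] += 1
        weights[side] += c.reliability
    return counts, weights


# Made-up sample data, purely for illustration.
sample = [
    Citation("Journal A", True, 0.75),
    Citation("Journal B", True, 0.5),
    Citation("Blog C", False, 0.25),
]
counts, weights = tally(sample)
print(counts)   # how many sources sit on each side
print(weights)  # the same split, weighted by reliability
```

The raw counts answer "how many of each are available," while the weighted totals let one rigorous study outweigh several flimsy ones.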

If the user does choose one citation to include, it would become a footnote or a link to the citation, depending on user preferences.
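As a rough sketch of that last step, the function below attaches a chosen citation either as a footnote or as an inline link. The function name `attach_citation`, the `style` preference values, the Markdown-style output, and the example.org URL are all assumptions made for illustration.

```python
def attach_citation(sentence, title, url, style="footnote", number=1):
    """Return (annotated_sentence, footnote_text) for the chosen citation.

    style is the assumed user preference: "footnote" or "link".
    """
    if style == "link":
        # Inline link: the whole sentence points at the citation.
        return f"[{sentence}]({url})", ""
    # Footnote: a marker after the sentence plus a matching note.
    marker = f"[^{number}]"
    return f"{sentence}{marker}", f"{marker}: {title}, {url}"


# Hypothetical citation, not a real study.
text, note = attach_citation(
    "Butter is bad for your diet.",
    "Example Study on Dietary Fat",
    "https://example.org/study",
)
print(text)
print(note)
```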

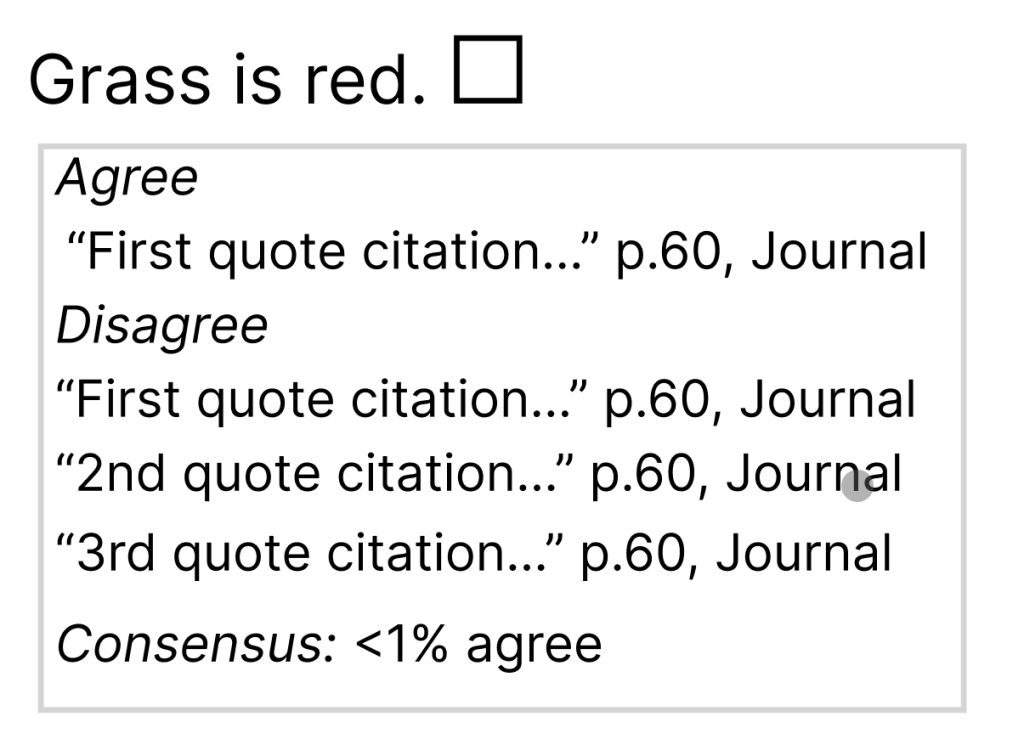

And what if a user types something that is patently false?

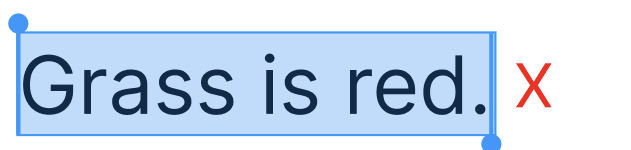

In that case, the system finds that it is false and, if the user does NOT choose a citation, marks it as (probably) false and highlights the text to suggest that the user rewrite it.

What to do with declarations that are undecided? They must be handled in the same way, so AI is kept from being the arbiter of what may or may not be true. I’ll leave the problem of how to decide what is “patently false” to the philosophers.
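One possible reading of that rule, sketched in Python: the system only labels a sentence, and the same highlight-unless-cited treatment applies whether the label is "probably false" or "undecided." The function names, the labels, and the 2-to-1 `margin` threshold are assumptions for illustration, not a claim about where the line should really be drawn.

```python
def verdict(agree_weight, disagree_weight, margin=2.0):
    """Label a sentence from the weighted evidence on each side.

    margin is an assumed threshold: one side must outweigh the
    other by this factor before the system leans either way.
    """
    if disagree_weight > 0 and disagree_weight >= margin * agree_weight:
        return "probably false"
    if agree_weight > 0 and agree_weight >= margin * disagree_weight:
        return "supported"
    return "undecided"


def should_highlight(label, citation_chosen):
    """Highlight doubtful text only when the user has attached no citation."""
    return label in {"probably false", "undecided"} and not citation_chosen


print(verdict(0.5, 3.0))                          # evidence leans against
print(verdict(1.0, 1.5))                          # too close to call
print(should_highlight("undecided", False))       # no citation attached
print(should_highlight("probably false", True))   # user supplied a citation
```

Either way the user keeps the final say: attaching a citation clears the highlight, and the system never deletes or rewrites the sentence itself.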

Yes, there are a bazillion things that haven’t been thought through. There are issues and loose threads all over the place, but this is just a sketch. I am trying to drag AI into the citation tradition that has served academia so well.